mind the gap

There’s a big gap between what AI systems have promised and what they have delivered.[1] Half of it is that we love to project our hopes onto new technology; the other half is that agents need infrastructural changes before they can actually be effective. Two questions keep coming up: Where is all this money going? When does it work for me?

Despite the hype and the fatigue that followed, I still think the technology is very special, magical even.[2] I think it could be positively transformative for everyone. So what is necessary to actually fulfill the promises made?

This post is for anyone developing, deploying, or integrating agents. I hope this post is outdated in 2 months.

design patterns for agents

We’re defining agents as software that navigates and takes action on your behalf. A few core properties make these agents suited to particular types of work:

Nearly 0 cost of labor

Smart given the right context

Right most of the time

GENERAL

#1 agent as associate

Doing the grunt work before creation

The collective imagination has fixated on the idea of AI doing net new, generative work, when in reality, agents are best at everything leading up to that. It’s useful to think of agents as associates: querying for information, translating to an optimal format, and doing initial reviews based on given criteria.

Following the levels of Bloom’s Taxonomy, there are many ways an agent can add value before you let it run rampant on your behalf. For example:

Remember - Fuzzy queries, such as searching for the most recent contributor

Understand - Summarizing long work orders into a succinct document

Apply - Prioritizing work in a to-do list

Analyze - Comparing long electrical standards documents

Evaluate - Assessing quality of work against explicit criteria, e.g. DFM (design for manufacturability)

This helps teams maximize value while minimizing immediate risk.

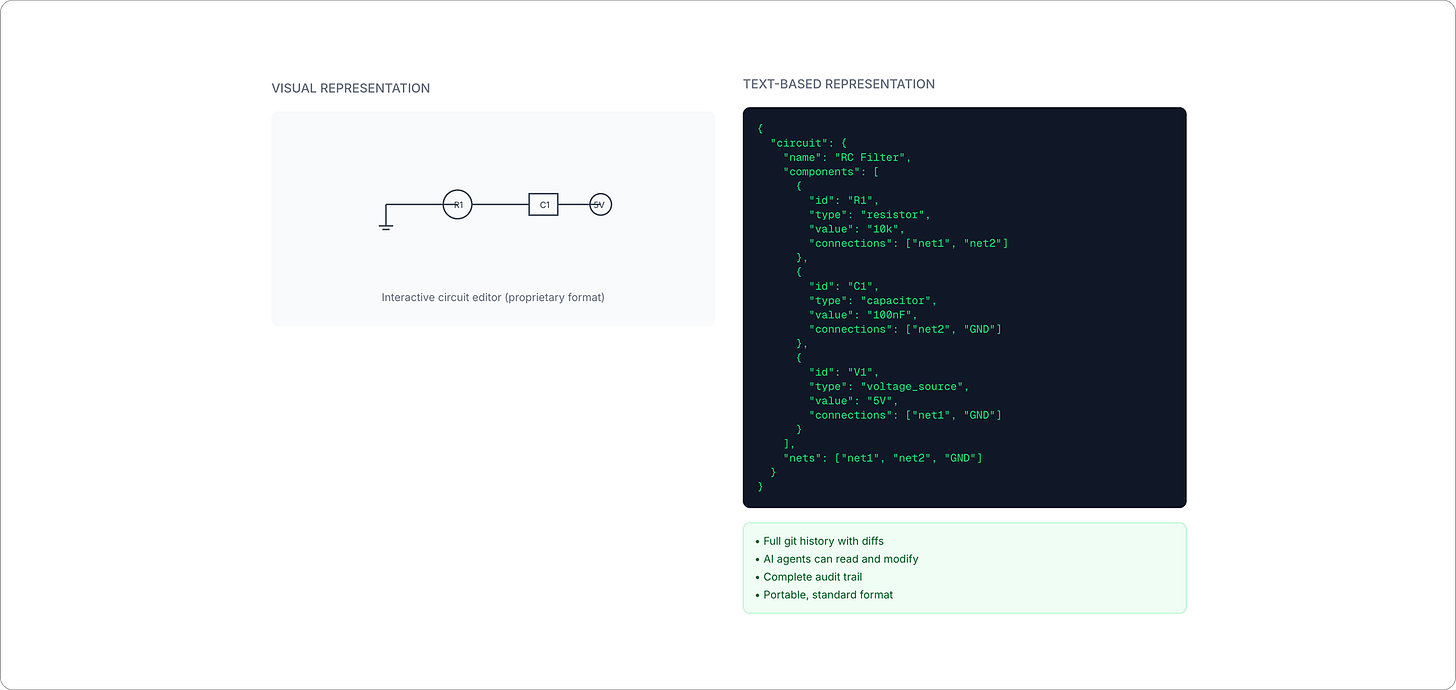

#2 flatten every system into text

Agents are at their best when the entire state of a system is expressed as plain, structured text. This explains why code was the first environment where agents made meaningful contributions.

Being able to diff everything makes an agent’s changes auditable and more trustworthy. Some patterns in other domains:

Mechanical - CAD assembly tree exported as JSON

Electrical - Netlist or new representations like TSCircuit

Video editing - Final Cut XML timeline(?)

Mapping - GIS or GeoJSON

I would not be surprised if there were domain specific “state files” for every system: people, governments, supply chains, etc.
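To make the diffing idea concrete, here is a minimal sketch using only the Python standard library. The assembly tree, part IDs, and the `state_diff` helper are all hypothetical; the point is just that once state is text, a change an agent makes shows up as a reviewable diff.

```python
import difflib
import json


def state_diff(before: dict, after: dict) -> list[str]:
    """Return a unified diff between two JSON-serializable system states."""
    # sort_keys and indent give a stable, line-oriented rendering, so the
    # diff reflects real changes rather than key ordering.
    a = json.dumps(before, indent=2, sort_keys=True).splitlines()
    b = json.dumps(after, indent=2, sort_keys=True).splitlines()
    return list(difflib.unified_diff(a, b, "before", "after", lineterm=""))


# Hypothetical assembly tree: the agent changed one bolt size.
before = {"assembly": "bracket", "parts": [{"id": "bolt-1", "size": "M4"}]}
after = {"assembly": "bracket", "parts": [{"id": "bolt-1", "size": "M5"}]}

changes = state_diff(before, after)
print("\n".join(changes))
```

The same helper works for any domain whose state can be dumped to JSON, which is most of the appeal.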

INPUTS

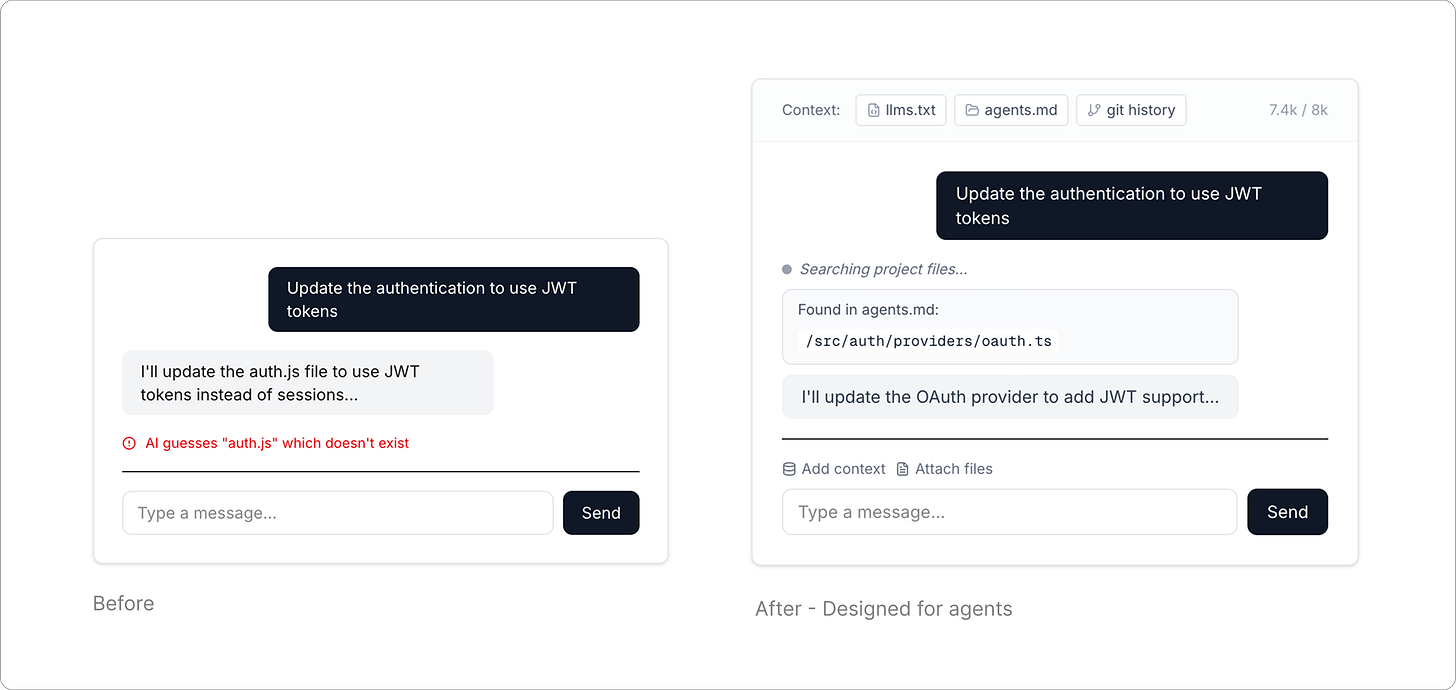

#3 giving LOTS of the correctly formatted context

Most hallucinations now happen because the agent doesn’t have the right information: it either never saw it or forgot it. Many optimizations help, for example:

Providing the map - Using llms.txt or agents.md to direct your agent to the right files or directories, and getting LLMs to write a record of their work after generation for future agents to reference

Tools - Having verbose (but clear) tool titles and descriptions

Augmented context - Turning a screen recording into text, or using MCP servers to give the agent access to apps so it can see whole configs

Labelling - Prefacing sections in prompt with “This section is:”

Formatting - Using markdown

Tools like Context7 and Firecrawl make this more feasible.
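As a rough illustration of the labelling and formatting points, here is a small sketch that assembles a markdown prompt where every block of context announces what it is. The `build_prompt` helper, the section names, and the file paths are made up for the example; real prompts will need their own structure.

```python
def build_prompt(task: str, sections: dict[str, str]) -> str:
    """Assemble a markdown prompt where every block of context is labelled."""
    parts = [f"## Task\n\n{task.strip()}"]
    for label, body in sections.items():
        # Each section announces what it is before the content appears.
        parts.append(f"## This section is: {label}\n\n{body.strip()}")
    return "\n\n".join(parts)


# Hypothetical context: a map of the repo plus a record of earlier work.
prompt = build_prompt(
    "Summarize the open work orders.",
    {
        "repository map": "- docs/orders/: one markdown file per work order",
        "record of prior work": "- Previous run summarized orders 1 through 40",
    },
)
print(prompt)
```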

#4 giving the agent ways to fail

The computer is so earnest that it will try its best even if the scope of the task is completely incorrect. Giving the agent ways to admit defeat or ask for more information prevents these errors in accuracy-sensitive tasks.
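One way to picture this is to give the agent's output an explicit status, so "I need more information" and "I can't do this" are first-class outcomes rather than something the agent has to paper over. This is only a sketch; the `Status` values, `AgentResult`, and `handle` are hypothetical names, not any framework's API.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    DONE = "done"
    NEEDS_INFO = "needs_info"  # agent asks a clarifying question
    CANNOT_DO = "cannot_do"    # agent admits the task is out of scope


@dataclass
class AgentResult:
    status: Status
    content: str  # the answer, the question, or the reason for giving up


def handle(result: AgentResult) -> str:
    """Route each outcome; only DONE results flow downstream."""
    if result.status is Status.DONE:
        return f"accept: {result.content}"
    if result.status is Status.NEEDS_INFO:
        return f"ask user: {result.content}"
    return f"escalate: {result.content}"


print(handle(AgentResult(Status.NEEDS_INFO, "Which revision of the drawing?")))
```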

#5 and fail quickly

“If you’re going to be wrong at least fail fast” - Anirudh

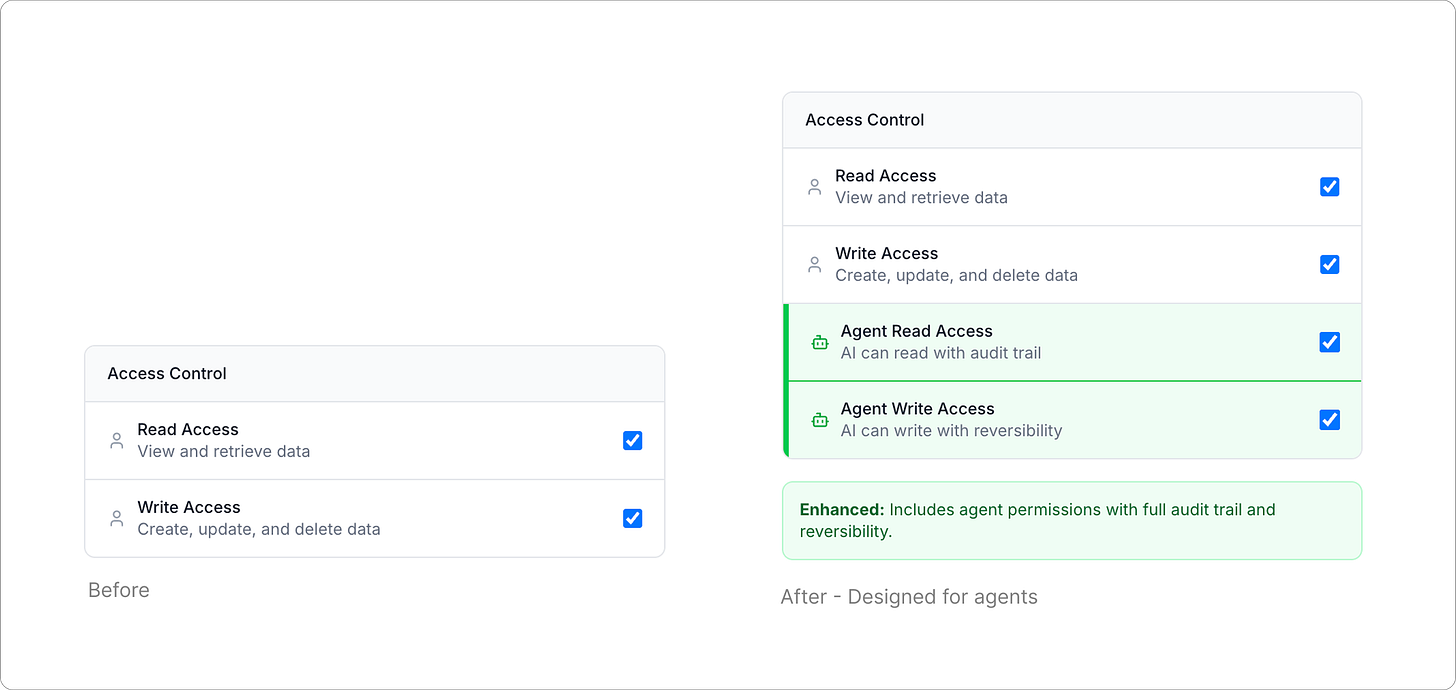

#6 giving special permission scopes

This is for third-party apps looking to allow agents on their platform: issue API keys and scopes designated specifically for agents. These provide a more granular audit trail and make agent actions easier to reverse.
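A toy sketch of what agent-specific keys could look like on the platform side: each key carries a set of scopes, and every authorization attempt lands in an audit log whether or not it was allowed. The scope strings, `AgentKey`, and `Platform` are assumptions for illustration only.

```python
from dataclasses import dataclass, field


@dataclass
class AgentKey:
    key_id: str
    scopes: frozenset[str]


@dataclass
class Platform:
    # Each entry is (key_id, action, allowed).
    audit_log: list[tuple[str, str, bool]] = field(default_factory=list)

    def authorize(self, key: AgentKey, action: str) -> bool:
        """Allow the action only if the key carries that scope, and log it."""
        allowed = action in key.scopes
        self.audit_log.append((key.key_id, action, allowed))
        return allowed


platform = Platform()
agent_key = AgentKey("agent-123", frozenset({"orders:read", "orders:draft"}))

print(platform.authorize(agent_key, "orders:read"))    # True
print(platform.authorize(agent_key, "orders:delete"))  # False
```

Because denied attempts are logged too, the audit trail shows what the agent tried to do, not just what it did.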

OUTPUT & VERIFICATION

#7 forced structured outputs

Deterministic data

For downstream systems to trust an agent, every response should clear a schema gate, validated through Zod, Pydantic, or another strong typing layer. Only then can we generate dynamic UI or even output citations.[3]
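Here is a hand-rolled sketch of a schema gate using only the standard library, so it runs anywhere. In practice a library like Pydantic or Zod would replace the manual checks. The `Review` shape and its fields are invented for the example.

```python
import json
from dataclasses import dataclass


@dataclass
class Review:
    passed: bool
    summary: str
    citations: list[str]


def parse_review(raw: str) -> Review:
    """Schema gate: raise ValueError unless raw is a well-formed Review."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict) or set(data) != {"passed", "summary", "citations"}:
        raise ValueError("unexpected or missing fields")
    if not isinstance(data["passed"], bool) or not isinstance(data["summary"], str):
        raise ValueError("wrong field types")
    cites = data["citations"]
    if not isinstance(cites, list) or not all(isinstance(c, str) for c in cites):
        raise ValueError("citations must be a list of strings")
    return Review(data["passed"], data["summary"], cites)


# Hypothetical model output that clears the gate.
good = '{"passed": true, "summary": "Meets DFM criteria", "citations": ["spec.md"]}'
print(parse_review(good))
```

Anything that fails the gate raises instead of reaching downstream code, which is where a retry or a human fallback can hook in.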

#8 agent as verifier

After producing an output, use other agents to evaluate it.

This can be as simple as a chained tool call with a boolean (yes/no) output for whether or not the response answered the question. A more robust implementation could be a supervisory agent, as with ChatGPT Agent. It feels similar to giving the agent smart co-workers.
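The simple version of this can be sketched as a generate-then-verify loop. The `generate` and `verify` callables below are stand-ins for real model calls, and the drafts and names are made up; the only idea being shown is that a second pass returns a yes/no before anything is accepted.

```python
from typing import Callable, Optional


def answer_with_verification(
    question: str,
    generate: Callable[[str], str],
    verify: Callable[[str, str], bool],
    max_attempts: int = 3,
) -> Optional[str]:
    """Return the first answer the verifier accepts, or None if none pass."""
    for _ in range(max_attempts):
        answer = generate(question)
        if verify(question, answer):
            return answer
    return None


# Stand-ins for model calls: the first draft dodges, the second answers.
drafts = iter(["I'm not sure.", "The most recent contributor is Dana."])
result = answer_with_verification(
    "Who is the most recent contributor?",
    generate=lambda q: next(drafts),
    verify=lambda q, a: "contributor is" in a,
)
print(result)
```

Returning `None` after the attempt budget runs out gives the caller a clear signal to escalate rather than ship an unverified answer.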

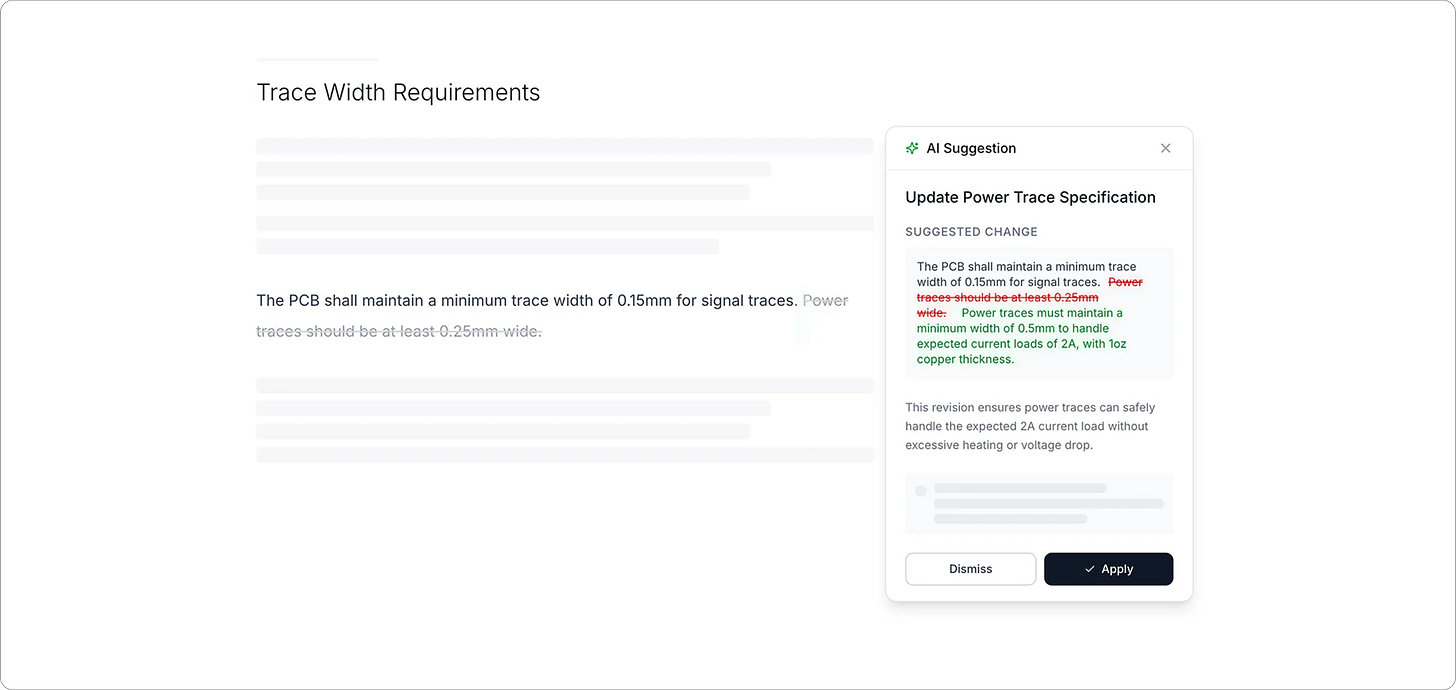

#9 human as final gate

90% machine, 10% human sign-off.

Even if every individual decision an agent makes is 99% correct, errors compound, so over long chains of action the result becomes too inaccurate to be useful. In cases where accuracy over a long chain is absolutely required, smooth UX for human verification defines product quality: inline diff views, one-click approvals, or flags for risky actions.
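The compounding is easy to check with back-of-the-envelope arithmetic. Assuming each step succeeds independently with the same probability (a simplification), the chance that an entire chain is correct is that probability raised to the number of steps:

```python
def chain_success(per_step: float, steps: int) -> float:
    """Probability that every step in a chain succeeds, assuming independence."""
    return per_step ** steps


for steps in (10, 50, 100):
    print(steps, round(chain_success(0.99, steps), 3))
# 10 0.904
# 50 0.605
# 100 0.366
```

So at 99% per step, a 100-step chain comes out fully correct only about 37% of the time under this assumption.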

#10 insurance

Progress needs security. In the march for new technologies we can praise the cowboy scientists and engineers, but we can’t ever forget the insurers! For example, Philadelphia’s growth was largely enabled by Ben Franklin co-founding one of America’s first fire insurance companies.

Companies will need protection for accidentally producing copyrighted material, accessing sensitive materials, novel security exploits, and more.

fini

I would like for the technology we make to help lots of people and industries. Reach out with thoughts / disagreements!

Thoughts in this piece co-developed with Hudzah. With input from Anirudh, Brian, and JM.

[1] Part of it is that demos and pitches test demand for a possible future instead of showing the present; the development needed to deliver on the promise gets backfilled afterward.

[2] I think I’m addicted to technology that feels like magic. I felt it for the first time with After Effects, and now I get beaten over the head with the feeling weekly as new AI models come out.

[3] Even if users don’t click into citations, requiring them forces the model to ground outputs in real data. It’s shocking to me that outputs from top APIs are still picked out by parsing Japanese brackets【 】.